While competitors trap you in cloud ecosystems or demand expensive hardware, our technology delivers true sovereignty, radical efficiency, and breakthrough perception. Reimagine industrial AI: deploy machine intelligence directly from your CAD files to low-cost edge devices – with zero cloud dependency, no $10k accelerators, and uncompromised data ownership.

Your Unmatched Edge

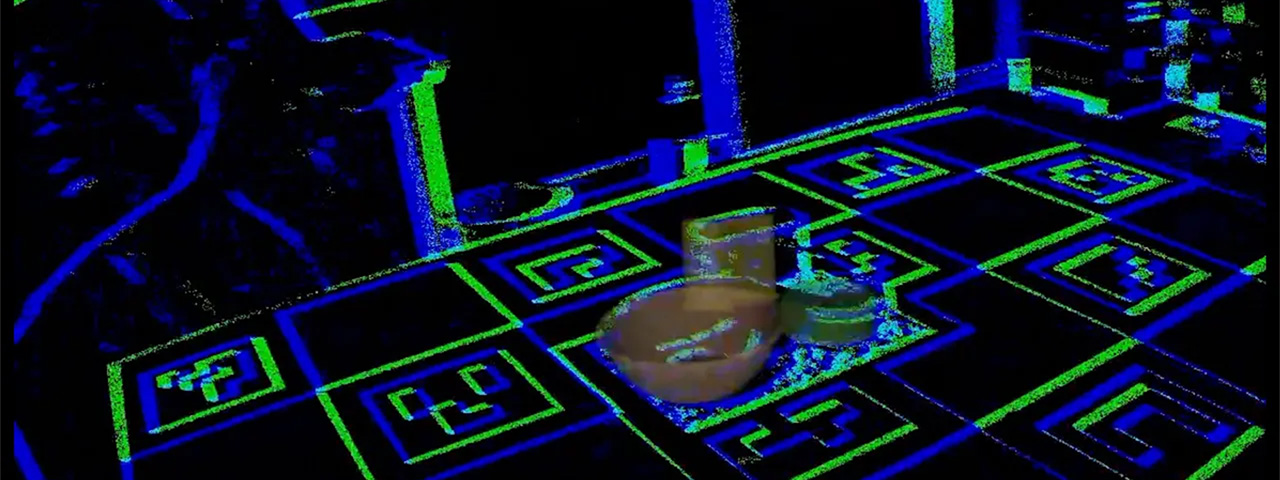

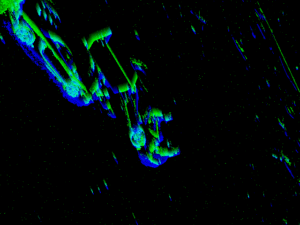

We guarantee end-to-end sovereignty: your CAD data, training, and deployment stay entirely on your infrastructure, eliminating cloud lock-in. Achieve radical cost efficiency by running models on affordable hardware at a fraction of competitors’ power consumption. Most powerfully, our event vision engine unlocks previously impossible environments: reliable operation in total darkness, under extreme motion, and in ultra-low-power (<1 W) settings where conventional solutions fail.

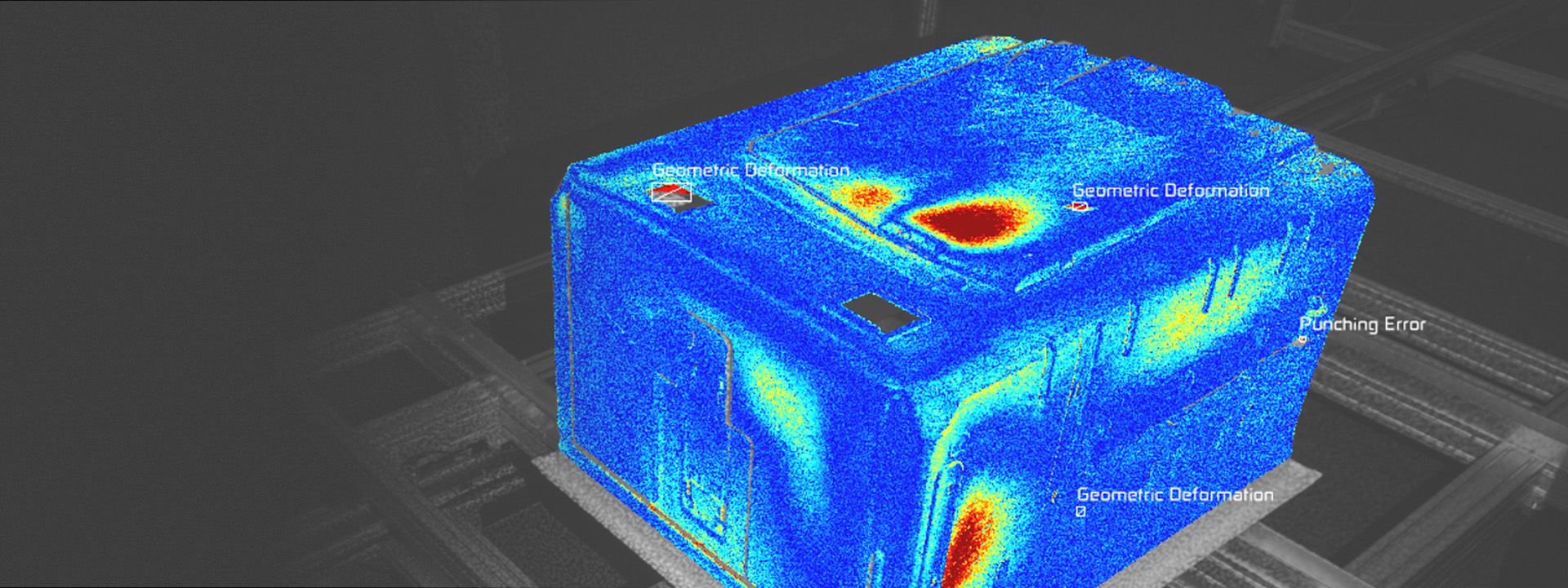

How We Redefine Industrial AI

Transform CAD files into intelligent edge systems using physics-based rendering and automated data synthesis. Train hardware-aware 2D/6D detection models that run locally without costly hardware upgrades. Finally, harness event cameras, which process sparse data streams, for superior performance in conditions other systems cannot handle.

The full story

Watch our webinar for more details on our training pipelines and the challenges we solve along the way!