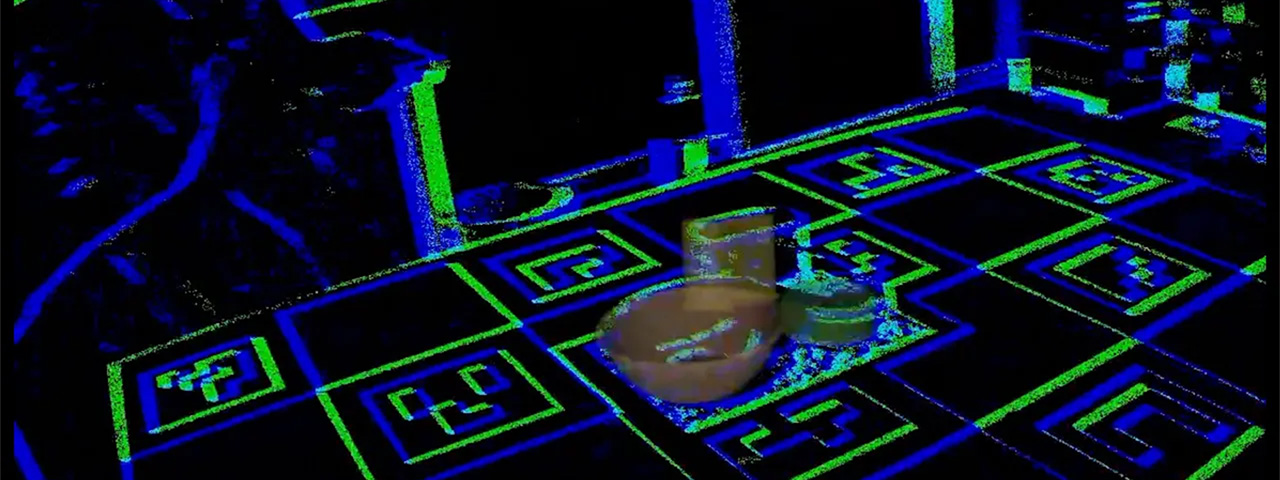

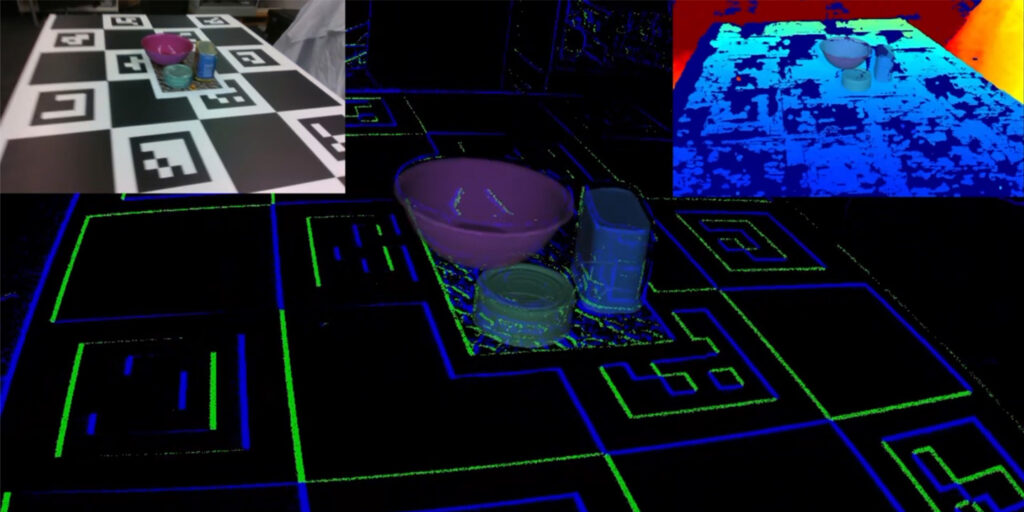

“Event-based Vision” is an exciting new method in computer vision, drawing inspiration from human visual perception. Unlike conventional techniques that process sequences of full image frames, event-based vision responds only to distinct visual events triggered by changes in scene brightness. This approach enables more energy-efficient processing and reduces latency when handling visual data.
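To make the idea of brightness-triggered events concrete, here is a minimal sketch of the commonly used idealized event model, in which a pixel emits an event when its log-brightness changes by at least a contrast threshold. The function name `events_from_frames`, the threshold value, and the toy frames are assumptions chosen for illustration; a real event sensor works asynchronously per pixel and attaches a timestamp to each event rather than comparing two frames.

```python
import math

def events_from_frames(prev, curr, threshold=0.2, eps=1e-3):
    """Return (x, y, polarity) for every pixel whose log-brightness
    change between two frames reaches the contrast threshold.

    Simplified illustration only: the threshold is an assumed value and
    timestamps are omitted.
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # eps avoids log(0) for completely dark pixels
            delta = math.log(c + eps) - math.log(p + eps)
            if abs(delta) >= threshold:
                # +1 means the pixel got brighter, -1 means it got darker
                events.append((x, y, 1 if delta > 0 else -1))
    return events

# Toy 2x3 brightness frames (values in [0, 1]); only two pixels change.
prev = [[0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5]]
curr = [[0.5, 0.9, 0.5],
        [0.5, 0.5, 0.2]]

events = events_from_frames(prev, curr)
print(events)
```

Only the two changed pixels produce output, while the four static pixels produce nothing. This sparsity is where the energy and latency savings described above come from.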

We are developing algorithms for event-based object pose estimation, useful in applications such as autonomous vehicles, robotics, and augmented reality. Building on recent advances in machine learning and computer vision, we train our models on synthetic data, which removes the need for manual labeling. The models are efficient enough to be deployed on edge devices like the Nvidia Jetson Orin Nano, allowing us to explore the benefits of event-based vision in real-world scenarios.

Benefits

By leveraging event-based vision for monitoring on edge devices, users benefit from:

- Extended Operation: Low power consumption allows continuous monitoring without frequent battery changes or high energy costs.

- Real-Time Alerts: Fast detection of rapidly moving objects, such as drones, ensures timely responses to potential threats.

- Cost-Effective Deployment: Efficient processing reduces the need for expensive hardware and cloud-based resources.

- Enhanced Reliability: Event-based algorithms focus only on significant visual changes, minimizing false alarms and improving the accuracy of urgent notifications.